Parallel world of OpenCV (HaarTraining)

Posted on : 03-06-2009 | By : rhondasw | In : OpenCV


If you want to generate a cascade with the OpenCV training tools, be prepared to wait a long time. For example, with a training set of 3000 positive and 5000 negative samples, it takes about 6 days to get a cascade for face detection. I wanted to generate many cascades with different training sets; I had also added my own features to the standard OpenCV ones and refactored the algorithms a little. So waiting 6 days only to find out that the cascade does nothing good =) was really annoying. To reduce the time, I turned to parallelization.

OpenMP.

The OpenCV code supports OpenMP. OpenMP is an API that lets a program run in several threads sharing the same memory. This only makes sense if you have a suitable processor: a multi-core one such as Intel Core Duo, or at least one with Hyper-Threading support.

The advantage of this method is that it is already implemented and, I really believe, debugged in OpenCV. OpenMP speeds up cascade generation: 4 days instead of 6 on my Intel Core2 1.8 GHz with 2 GB RAM.

MPI.

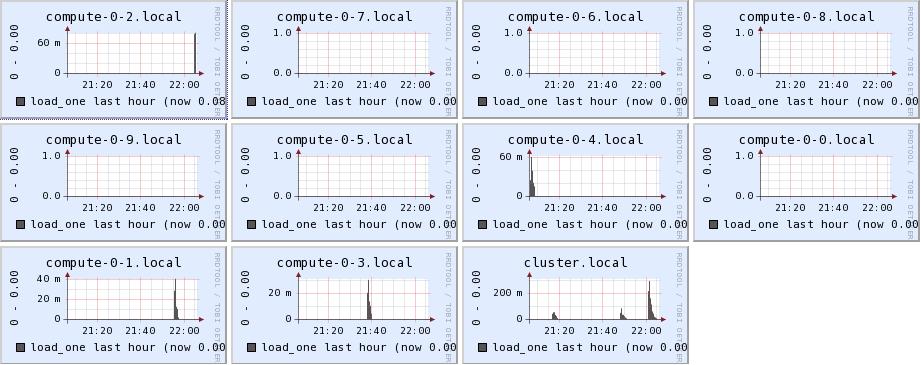

We built a Linux-based cluster of 11 machines, each with a 2.7 GHz processor and 2 GB RAM. The computers are linked via a 100 Mbit Ethernet LAN.

OpenCV's internal data structures are matrices and vectors, which are really good for parallelization. So we decided to add MPI API calls in the places marked with OpenMP defines, that is, to just clone the OpenMP schemes (who knows why we did so =), hurry, I suppose). In this way we parallelized the loops in general. But MPI, unlike OpenMP, has no shared memory, so the time spent synchronizing data (megabytes of traffic) over the Ethernet LAN wiped out the computation speed-up. We understood that for MPI we needed a parallel scheme in which the data synchronization would be small.

First, I wanted to find out which functions take the most time; printf profiling in cvCreateTreeCascadeClassifier helped me. And what do you think? The function icvGetHaarTrainingDataFromBG is the hero of the occasion: its computation time was 9 hours on the 11th cascade stage! In contrast, icvGetHaarTrainingDataFromVec took about 10 minutes. The reason is that positive samples are resized to 20×20 when the training vec file is made, so each picture is simply run through the cascade once. Negative samples keep their original resolution, which varies, so each picture is scanned with a scaling 20×20 window to find false positives, as haardetect does. The process stops when we have the required number of false-positive pictures. To reduce the time, we needed to parallelize icvGetHaarTrainingDataFromBG while avoiding large data synchronization.
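To get a feel for why scanning negatives is so much heavier than running the positives, here is a rough sketch that counts how many positions a scaling 20×20 window visits in one image. The scale factor of 1.1 and the stride rule are assumptions chosen for illustration, not values taken from the OpenCV source, and `count_windows` is a hypothetical helper.

```python
def count_windows(width, height, win=20, scale_factor=1.1, step=1):
    """Count the positions visited by a win x win window slid over an
    image at growing scales (assumed scan scheme, for illustration only)."""
    total = 0
    size = float(win)
    while int(size) <= min(width, height):
        s = int(size)
        # Assumption: the stride grows in proportion to the window size.
        stride = max(1, int(step * size / win))
        nx = (width - s) // stride + 1   # horizontal positions
        ny = (height - s) // stride + 1  # vertical positions
        total += nx * ny
        size *= scale_factor
    return total


if __name__ == "__main__":
    # A 20x20 positive sample is evaluated exactly once.
    print("positive 20x20:", count_windows(20, 20))
    # A larger negative image yields a far bigger number of candidate windows.
    print("negative 640x480:", count_windows(640, 480))
```

Under these assumptions a single negative image produces several orders of magnitude more cascade evaluations than a positive sample, which is consistent with the gap between the two functions measured above.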

icvGetHaarTrainingDataFromBG works this way:

- it gets the negative samples

- it finds false positives until the required number is reached

- it returns the false positives
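The three steps above can be sketched as a simple loop. This is not the OpenCV implementation: `collect_false_positives` and `cascade_accepts` are hypothetical names, and a "window" here is any object the stand-in classifier can judge.

```python
from typing import Callable, Iterable, List, Tuple, TypeVar

Window = TypeVar("Window")


def collect_false_positives(
    windows: Iterable[Window],
    cascade_accepts: Callable[[Window], bool],
    required: int,
) -> Tuple[List[Window], int]:
    """Scan negative windows until `required` of them pass the cascade.

    Returns the false positives found and the number of windows scanned.
    """
    found: List[Window] = []
    scanned = 0
    if required <= 0:
        return found, scanned
    for window in windows:
        scanned += 1
        # Every window comes from a negative image, so any acceptance
        # by the current cascade is a false positive.
        if cascade_accepts(window):
            found.append(window)
            if len(found) >= required:
                break
    return found, scanned


if __name__ == "__main__":
    # Toy stand-in: the "cascade" wrongly accepts every 50th window.
    hits, scanned = collect_false_positives(
        range(1000), lambda w: w % 50 == 0, required=5
    )
    print(hits, scanned)
```

The loop also suggests why later stages get slower: the stronger the cascade already is, the fewer windows it accepts, so more windows must be scanned to reach the same required count.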

If we shuffle the negative samples and then call icvGetHaarTrainingDataFromBG, what will happen? Anything bad? The output will contain different false-positive pictures, but the algorithm as a whole will still work correctly and generate a valid cascade. So we decided to split the negative samples into 11 parts (one per machine in the cluster); each machine calls icvGetHaarTrainingDataFromBG on its own negative set, and then the machines' outputs are joined together.
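A minimal sketch of the split-and-join idea follows, simulated in one process. The round-robin split and the even division of the required false-positive count between machines are assumptions for illustration; the post does not say how the real code divides the work, and all function names here are hypothetical.

```python
def split_round_robin(items, parts):
    """Split items into `parts` lists of nearly equal length."""
    return [items[i::parts] for i in range(parts)]


def quota_per_node(required, parts):
    """Divide the required number of false positives between nodes
    (assumed policy: as evenly as possible)."""
    base, extra = divmod(required, parts)
    return [base + (1 if i < extra else 0) for i in range(parts)]


def gather_false_positives(negatives, parts, required, cascade_accepts):
    """Simulate every node scanning only its own share, then join results."""
    chunks = split_round_robin(negatives, parts)
    quotas = quota_per_node(required, parts)
    joined = []
    for chunk, quota in zip(chunks, quotas):
        # On a real cluster each iteration would run on a separate machine;
        # only the (small) list of hits has to travel over the network.
        hits = [n for n in chunk if cascade_accepts(n)][:quota]
        joined.extend(hits)
    return joined


if __name__ == "__main__":
    negatives = list(range(1100))  # stand-ins for negative windows
    result = gather_false_positives(
        negatives, parts=11, required=22,
        cascade_accepts=lambda n: n % 10 == 0,
    )
    print(len(result), "false positives gathered from 11 simulated nodes")
```

The point of the scheme is that each node needs only its own negatives as input and returns only its false positives, so the traffic between machines stays small compared with synchronizing every parallel loop.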

Computation sped up considerably: instead of 6 days, the cascade was generated within 21 hours! Using the performance tool, we compared our cascade with one generated on a single machine from the same training set. The results are very similar.
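For reference, the timings quoted in this post can be turned into speed-up figures with a few lines of arithmetic. The helper names are hypothetical, and the comparison is only approximate: the 6-day baseline comes from a single 1.8 GHz machine, while the cluster nodes run at 2.7 GHz.

```python
def speedup(baseline_hours, parallel_hours):
    """How many times faster the parallel run is than the baseline."""
    return baseline_hours / parallel_hours


def efficiency(baseline_hours, parallel_hours, workers):
    """Speed-up divided by the number of workers (1.0 would be ideal)."""
    return speedup(baseline_hours, parallel_hours) / workers


if __name__ == "__main__":
    baseline = 6 * 24  # 6 days on a single machine, in hours
    print("OpenMP speed-up:", round(speedup(baseline, 4 * 24), 2))
    print("MPI speed-up:", round(speedup(baseline, 21), 2))
    print("MPI efficiency on 11 machines:", round(efficiency(baseline, 21, 11), 2))
```

So OpenMP gives about 1.5 times, and the 11-machine cluster a little under 7 times, with the caveat about differing hardware noted above.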

Hi, Vasiliy Mun

I was so impressed by your great work on Parallel world of OpenCV (HaarTraining). Could you describe the OpenMP configuration in large clusters?

Hi, the thing is that I did not use OpenMP, but MPI.

I built the code with OpenMP disabled, but with MPI API calls. Then the code was just built for Linux and launched on the cluster using our MPI framework.

If you are interested in OpenMP, look here http://msdn.microsoft.com/en-us/library/tt15eb9t.aspx

Hi. I did not quite understand. “Build code with MPI API calls”? Did you change source code, or do you have some magic openmp->mpi -converter?

Hi! I changed the source code and call MPI to start a parallel section, end a parallel section, or synchronize data.

Hi,

Great Job!!

I am interested in knowing more about MPI API calls you have mentioned in your blog. Where can i find the reference for “MPI”?

Regards Manisha.

Hi, we used standard MPICH2 http://www.mcs.anl.gov/research/projects/mpich2/

1) You need an MPI library to build the code, for Visual Studio 2005 for example.

2) Then you need a cluster based on MPI.

Hi, I am interested in haar classifiers, however, I am not proficient in opencv and I only know MATLAB. Can you suggest some books or websites that contain material on training of haar classifiers using MATLAB

Hello Mukund,

There are some examples about this topic, please check: http://www.mathworks.com/matlabcentral/fx_files/24092/3/content/html/demo_haar.html

and http://www.mathworks.com/matlabcentral/fileexchange/4619

There is also a way to call external C/C++ functions from Matlab; check http://www.mathworks.com, it has some examples of how to use OpenCV functions from Matlab.

Also I have found some useful functions at http://www.mathworks.com/matlabcentral/fileexchange/5104

Hi John,

Thanks a lot, I found your link very useful for my project. I am trying to implement the haar classifier on an FPGA and I am having difficulties in fixing the number of classifiers. I found that most boost algorithms generate 1000’s of haar features. Are there any other algorithms which use fewer number of classifiers without compromising on detection rates?. I am aiming for detection rates of 80 to 85% and false positive rates of 5 to 10%

You can use the PCA method to get features and then use another binary classifier like NBC.

http://www.codeproject.com/KB/audio-video/haar_detection.aspx

Hi, in 2007 I built a cascade classifier using an Intel Pentium 4 600 MHz, and we learned that it is painful (getting a satisfying result needs more training and took many days, even weeks). Next year I plan to bring haartraining to distributed computing for my master’s thesis. Could you give me some guidance on it (feasibility, where to start, reference papers, etc.)? Thanks

Hi Rudy,

This article has enough detail. Can you please make your question more specific? What further guidance do you need in particular?

->feasibility,

[Aleksey] Yes, it is feasible as per this article.

->where starting point,

[Aleksey] MPI, as stated in this article.

->reference paper etc).

[Aleksey] There is none; we didn’t find one and thus wrote this one.

Unfortunately we cannot share our code, but you can see the “about” page and contact our marketing team to get the source code or ask for our help.

Hi, would it be possible to get some assistance or code for the haartraining project with the changes mentioned for MPI? We are planning to use a scalable HPC Linux cluster service to process a very large number of very complex cascades.

Any help would be greatly appreciated

Hi Nick,

We can answer your specific questions. Our management doesn’t allow us to share the code here on the blog. If you would like to have our code, please see the “about” page for details on how to contact our marketing team.

Aleksey

Hi, thank you.

I read that on a previous post after I made the post. I have already sent a request on the about page a few hours ago as per the instructions and look forward to hearing a reply.

Nick

Great!

[…] Yes, one possible way is to use parallel programming. We have implemented OpenCV haartraining using MPI for a Linux cluster. You can read it here […]

Hi,

nice article and interesting idea.

I think it would be interesting to see the results of this experiment, reduced computing time is just one of the results, but what about performance?

Could you please post the results given by the performance tool comparing your cascade with the one generated with a single computer?

Thank you!

Best regards,

Victor

Hi Aleksey,

I’m interested in part “Results are very similar” – were there any significant differences in generated cascade?

Regards,

Vanja

Hi There,

I would like to know if anyone has a precompiled haartraining.exe with OpenMP support enabled?

I am using Visual Studio 2010 and have been unable to compile the version shipped with opencv 2.0 with OpenMP support. It would be great if someone could give me some advice on how to compile with OpenMP (I know how you select the option in Visual Studio) But I am receiving a lot of errors when trying to build the solution. Preferably if someone already has a precompiled binary they would be willing to share I would be grateful or alternatively if someone has a VS 2008 / 2010 solution they could share that would also be great.

Thanks,

Sam.

[…] the training process to produce a classifier for detecting an object in an image (the idea is the same as this one), but the obstacle is on the hardware side (my campus doesn’t have the facilities, and besides that […]

Aleksey Kodubets, was it necessary to create new MPI datatypes and send them to the processes using the MPI_Send function? Just out of curiosity: how much time did you spend on this project of parallelizing OpenCV haartraining?

So you just replace the OpenMP calls with the OpenMPI calls? Right?

hi,

How do I install OpenMP? Please help me… I am doing a face detection project.

I am doing an image effect project with OpenMP, but I have the OpenCV source code. How can I change the OpenCV source code to use OpenMP?

Thanks for your work!

Could you help me with how to split the negative samples into N parts?

I still don’t understand how to speed up the training. Thanks a lot!